In November 2023, fraudsters disguised themselves as a close friend of Mr. Guo from a certain tech company, swindling 4.3 million RMB. In December 2023, parents of a student studying abroad received a ransom video claiming their child was kidnapped, demanding a ransom of 5 million RMB. In January 2024, 19 multinational corporation employees in Hong Kong fell victim to phishing scams, resulting in a loss of 200 million HKD. In February 2024, the AI virus "GoldPickaxe" emerged, capable of stealing facial information and transferring users' bank balances...

According to McKinsey & Company, AI-based identity fraud has become the fastest-growing type of financial crime in the United States and is on the rise globally. Research by the UK's GDG indicates that over 8.6 million people in the UK are using false or others' identities to obtain goods, services, or credit.

A recent report from the US Department of the Treasury, "Managing Artificial Intelligence-Specific Cybersecurity Risks in the Financial Services Sector," emphasizes that advances in AI make it easier for cybercriminals to use deepfakes to impersonate financial institution customers and access their accounts. The report states, "AI can help existing threat actors develop and test more sophisticated malicious software, providing them with complex attack capabilities previously only available to the most resourceful actors. It can also assist less skilled threat actors in developing simple but effective attacks."

The report shows that among various AI threats facing financial institutions, the most significant threats are social engineering attacks and forged identity fraud. On one hand, attackers use generative AI to create more convincing phishing emails, write phishing website content, and deceive financial institution staff. On the other hand, attackers leverage AI to mimic customers' photos, voices, and videos to bypass identity verification processes.

Related statistics indicate that in 2023, fraud incidents based on "AI face swapping" grew by 3000%, and the number of AI-based phishing emails increased by 1000%.

Attackers: How an AI-Driven Fraud Unfolds

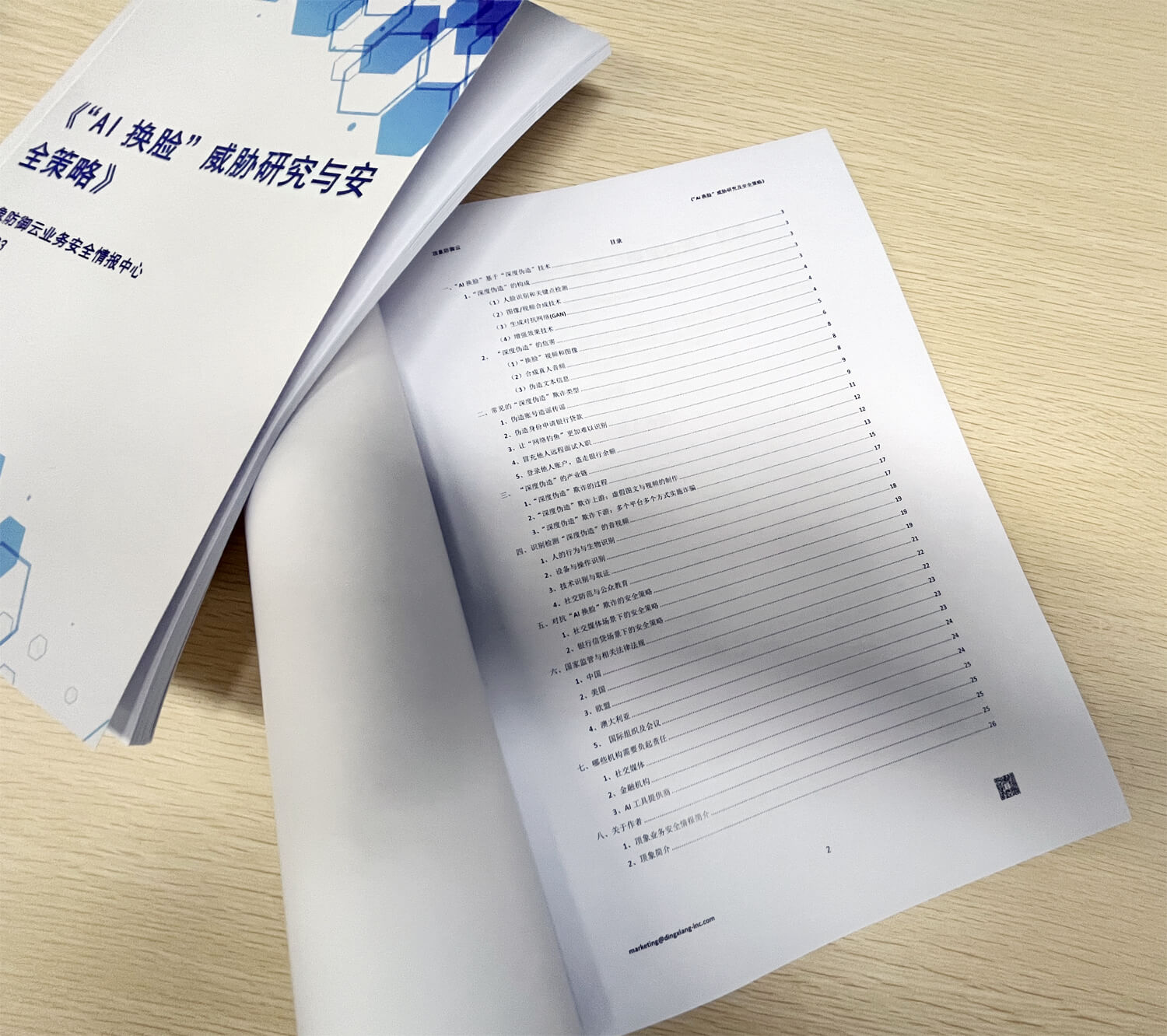

Attackers use a range of channels, including social media, email, remote meetings, online recruitment, and news updates, to carry out AI attacks on financial institutions. A recent intelligence digest from the Dingxiang Defense Cloud Business Security Intelligence Center, "AI Face-Swapping Threat Research and Security Strategies," analyzes a financial fraud case involving "AI face-swapping" in detail and lays out the scammers' entire process.

Phase One:

Gaining the victim's trust. The fraudsters contact the victim through text messages, social tools, social media, phone calls, and similar channels. They gain trust by showing that they already know personal details, for example by directly mentioning the victim's name, family, phone number, workplace, address, ID number, colleagues or partners, and even some past experiences.

Phase Two:

Recording the victim's facial information. The fraudsters engage the victim through social tools, video conferences, or video calls, and capture the victim's facial information during the call (facial expressions, nodding, tilting the head, opening the mouth, blinking, and so on). This material is then used to create fake videos and portraits with "AI face-swapping" technology. During this phase, the victim may also be induced to set up call forwarding or to download a malicious app that can forward or intercept calls from the bank's customer service.

Phase Three:

Logging into the victim's bank account. The fraudsters use the bank's app to log into the victim's bank account, submit the fake video and portrait created through "AI face-swapping" for the bank's facial recognition authentication, and intercept the SMS verification codes and risk alerts that the bank sends to the victim's phone.

Phase Four:

Draining the victim's bank balance. Calls from the bank are redirected to a virtual number set up in advance by the fraudster, who answers while impersonating the victim. Once the fraudster passes manual verification by the bank's customer service staff, the balance on the victim's bank card is transferred out.

Overall, AI-enabled fraud follows the four steps above, each closely linked to the next. Victims who cannot tell real from fake, and platforms that cannot warn and intercept effectively, can easily fall into the trap set by fraudsters.
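Because the four phases leave traces at different moments, a platform can look for them in combination rather than one at a time. The following is a minimal sketch of that idea, not the method described in the digest: the event names, the 24-hour window, and the three-signal threshold are all assumptions made for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Hypothetical event names; a real platform would map its own telemetry onto these.
CHAIN_SIGNALS = {
    "call_forwarding_enabled",   # Phase Two: bank calls diverted away from the victim
    "new_device_login",          # Phase Three: login from a device not seen before
    "face_auth_passed",          # Phase Three: facial recognition accepted
    "large_transfer_requested",  # Phase Four: balance about to be moved
}


@dataclass(frozen=True)
class Event:
    account_id: str
    name: str
    at: datetime


def chain_signals_seen(events, account_id, window=timedelta(hours=24)):
    """Return the chain signals seen for one account within `window` of its latest event."""
    own = [e for e in events if e.account_id == account_id]
    if not own:
        return set()
    latest = max(e.at for e in own)
    return {e.name for e in own if e.name in CHAIN_SIGNALS and latest - e.at <= window}


def should_hold_transfer(events, account_id, min_signals=3):
    """Hold a transfer when it arrives together with enough other chain signals."""
    seen = chain_signals_seen(events, account_id)
    return "large_transfer_requested" in seen and len(seen) >= min_signals
```

A held transfer would then go to manual review or an out-of-band callback. The threshold is a tuning choice: a lower value catches more fraud at the cost of more interruptions for legitimate customers.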

Banks: How to Guard Against AI Attacks

This is a new challenge for the entire industry. Attackers now operate with industrialized, mature attack patterns. The intelligence digest "AI Face-Swapping Threat Research and Security Strategies" suggests that preventing and combating AI fraud requires, on one hand, effectively identifying and detecting AI-generated content and, on the other, preventing AI fraud from being exploited and spread. This calls not only for technical countermeasures but also for a complex psychological contest with attackers and greater public security awareness. Enterprises therefore need to strengthen digital identity recognition, review account access permissions, and minimize data collection. Raising employees' ability to detect AI threats is also crucial.

1. Build a comprehensive security system with multi-channel, full-scenario, and multi-stage protection.

Such a system covers every channel, platform, and business scenario, and provides security services such as threat awareness, security protection, data accumulation, model building, and strategy sharing. It should meet the needs of different business scenarios, carry industry-specific strategies, and accumulate and iterate on experience from the enterprise's own business so that platform-level prevention and control are precise. In practice, this means deploying multiple security solutions at different points in the network to guard against a variety of threats, drawing up comprehensive incident response plans so that AI attacks can be handled quickly and effectively, and implementing personalized protection.

2. Construct an AI-driven security tool system.

Build a fraud prevention system that combines manual review with AI technology to raise automation and efficiency, and to detect and respond to AI-based cyber attacks.

3. Strengthen identity verification and protection.

This includes enabling multi-factor authentication, encrypting data at rest and in transit, and implementing firewalls. Verify more frequently when an account logs in remotely, changes device, changes phone number, or suddenly becomes active after lying dormant, so that the user's identity stays consistent throughout use. Compare device information, geographic location, and behavior to detect and prevent abnormal operations.

4. Strengthen account authorization control.

Restrict access to sensitive systems and accounts according to the principle of least privilege, so that each user can reach only the resources their role requires. This limits the potential impact of account hijacking and prevents unauthorized access to systems and data.

5. Continuously stay informed about the latest technologies and threats.

Keep abreast of the latest developments in AI technology as far as possible and adjust security measures accordingly. Continuous research, development, and updating of AI models are crucial to staying ahead in an increasingly complex security landscape.

6. Continuously educate and train employees on security.

Provide ongoing training on AI technology and its related risks, using simulated attacks, vulnerability discovery exercises, and security training to help employees identify and avoid AI attacks and other social engineering risks. Encourage vigilance and prompt reporting of abnormal situations, which significantly improves the organization's ability to detect and respond to deepfake threats.
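Point 3 above names four events that should trigger extra verification: remote logins, device changes, phone number changes, and sudden activity in dormant accounts. The sketch below shows one way such a rule set could be expressed. The field names, the country-based test for a "remote" login, and the 180-day dormancy threshold are assumptions for illustration, not values from the digest.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

DORMANT_AFTER = timedelta(days=180)  # assumed threshold for a "dormant" account


@dataclass
class AccountState:
    known_device_ids: set
    registered_phone: str
    usual_country: str
    last_active: datetime


@dataclass
class LoginAttempt:
    device_id: str
    phone: str
    country: str
    at: datetime


def step_up_reasons(state, attempt):
    """List the reasons, if any, why this login should face extra verification."""
    reasons = []
    if attempt.device_id not in state.known_device_ids:
        reasons.append("device_change")
    if attempt.phone != state.registered_phone:
        reasons.append("phone_number_change")
    if attempt.country != state.usual_country:
        reasons.append("remote_login")
    if attempt.at - state.last_active > DORMANT_AFTER:
        reasons.append("dormant_account_activity")
    return reasons
```

An empty list means the login can proceed normally; any entry would prompt an additional factor. Returning the reasons rather than a bare yes/no keeps the decision auditable.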

In short, guarding against AI-based threats means both identifying and detecting them effectively and preventing AI fraud from being exploited and spread. Technical countermeasures alone are not enough; the effort also involves a psychological contest with attackers and greater public security awareness.

Security: Defense Products Against AI Fraud

Traditional security tools and measures are no longer effective against the fraud threats that AI brings. Identifying fraud risks in time requires comprehensive risk prevention and control before, during, and after an event. Dingxiang's newly upgraded anti-fraud technology and security products can build a multi-channel, full-scenario, multi-stage security system for enterprises to combat the new threats brought by AI.

Securing Apps to Prevent AI-Automated Cracking

Dingxiang's adaptive iOS reinforcement, based on graph neural network technology, automatically selects an appropriate obfuscation method for each code block according to its characteristics, significantly increasing the difficulty of reverse analysis while reducing computational overhead by 50%. Through its encryption and obfuscation engines, app code can be encrypted, obfuscated, and compressed, which greatly improves code security and prevents products from being cracked, copied, or repackaged by attackers.

Securing Accounts to Prevent AI Malicious Registration and Login

Dingxiang atbCAPTCHA, based on AIGC technology, defends against AI brute-force cracking, automated attacks, and phishing attacks, preventing unauthorized access, account hijacking, and malicious operations and thereby protecting system stability. It integrates 13 verification methods and multiple control strategies, aggregating 4380 risk policies and 112 risk intelligence categories that cover 24 industries and 118 risk types. Its control precision reaches 99.9%, and it can quickly turn observed risks into intelligence. It also supports seamless verification for trusted users and shortens real-time countermeasure response to within 60 seconds, further improving the convenience and efficiency of digital login services.

Identifying AI-Forged Devices to Prevent AI Malicious Fraud

Dingxiang device fingerprinting generates a unified, unique fingerprint for each device by linking multiple pieces of device information. It builds a multi-dimensional identification model based on devices, environments, and behaviors to identify risky devices such as virtual machines, proxy servers, and manipulated emulators. It analyzes whether a device shows multiple account logins, frequent IP address changes, or frequent device attribute changes, and detects abnormal behavior or behavior inconsistent with the user's habits. This helps track and identify fraudster activity, keeps operations consistent across all channels under the same ID, and supports cross-channel risk identification and control.
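The general idea of device fingerprinting can be illustrated with a short sketch. This is not Dingxiang's implementation, whose details are not given here: the attribute names, the use of SHA-256 over a fixed set of attributes, and the thresholds are all assumptions chosen to show the technique.

```python
import hashlib
from collections import defaultdict

# Assumed set of relatively stable attributes; real products combine many more signals.
STABLE_KEYS = ("os", "os_version", "model", "screen", "timezone")


def device_fingerprint(attrs):
    """Hash a fixed, ordered set of attributes into one identifier for the device."""
    canonical = "|".join(f"{k}={attrs.get(k, '')}" for k in STABLE_KEYS)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


class DeviceTracker:
    """Remember which accounts and IP addresses each fingerprint has been seen with."""

    def __init__(self, max_accounts=3, max_ips=5):
        self.max_accounts = max_accounts
        self.max_ips = max_ips
        self._accounts = defaultdict(set)
        self._ips = defaultdict(set)

    def observe(self, attrs, account_id, ip):
        """Record one login and return the fingerprint plus any risk flags raised."""
        fp = device_fingerprint(attrs)
        self._accounts[fp].add(account_id)
        self._ips[fp].add(ip)
        flags = []
        if len(self._accounts[fp]) > self.max_accounts:
            flags.append("many_accounts_on_device")
        if len(self._ips[fp]) > self.max_ips:
            flags.append("frequent_ip_changes")
        return fp, flags
```

Hashing only attributes that rarely change keeps the fingerprint stable across sessions. A plain hash like this is easy to evade by altering one attribute, which is why the text above stresses combining device, environment, and behavior signals.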

Unearthing Potential Fraud Threats to Prevent Complex AI Attacks

Dingxiang Dinsight helps enterprises conduct risk assessment, anti-fraud analysis, and real-time monitoring to improve the efficiency and accuracy of risk control. Dinsight processes day-to-day risk control strategies in under 100 milliseconds on average, and supports configuration-based access to, and accumulation of, multi-party data. Drawing on mature indicators, strategies, models, and deep learning technologies, it can monitor its own performance and iterate its risk control mechanisms. Paired with the Xintell intelligent model platform, it can automatically optimize security policies for known risks, and can mine risk control logs and data for potential risks so that risk control strategies for different scenarios can be set up through configuration. Xintell standardizes complex data processing, mining, and machine learning workflows, providing one-stop modeling services from data processing and feature derivation through model construction to final deployment.

Intercepting "AI Face-Swapping" Attacks to Ensure Facial Application Security

Dingxiang's full-chain, panoramic facial security threat perception solution intelligently verifies information across multiple dimensions, including device environment, facial information, image authenticity, user behavior, and interaction status. It quickly identifies over 30 types of malicious attacks, such as injection attacks, liveness spoofing, image forgery, camera hijacking, debugging risks, memory tampering, Root/Jailbreak, malicious ROMs, and emulator use, and automatically blocks the operation once it discovers forged videos, fake face images, or abnormal interactive behavior. The intensity and user-friendliness of video verification can also be configured flexibly, giving normal users seamless verification while dynamically strengthening verification for abnormal users.
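Scaling verification intensity to risk can be sketched as a mapping from detected signals to a verification tier. The signal names, weights, and thresholds below are hypothetical and only illustrate the pattern; they are not taken from Dingxiang's product.

```python
from enum import Enum


class Verification(Enum):
    SEAMLESS = "seamless"
    LIVENESS = "liveness_check"
    ENHANCED = "enhanced_liveness"
    BLOCK = "block"


# Hypothetical signals and weights; a real system would calibrate these on its own data.
SIGNAL_WEIGHTS = {
    "rooted_or_jailbroken": 30,
    "emulator": 40,
    "abnormal_interaction": 25,
    "camera_hijack": 100,
    "injection_detected": 100,
}


def choose_verification(signals):
    """Pick a verification tier from the total weight of the distinct signals seen."""
    score = sum(SIGNAL_WEIGHTS.get(s, 0) for s in set(signals))
    if score >= 100:
        return Verification.BLOCK
    if score >= 50:
        return Verification.ENHANCED
    if score > 0:
        return Verification.LIVENESS
    return Verification.SEAMLESS
```

Signals that indicate a forged video feed, such as camera hijacking or injection, carry enough weight on their own to block the operation, while weaker signals only raise the verification level.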