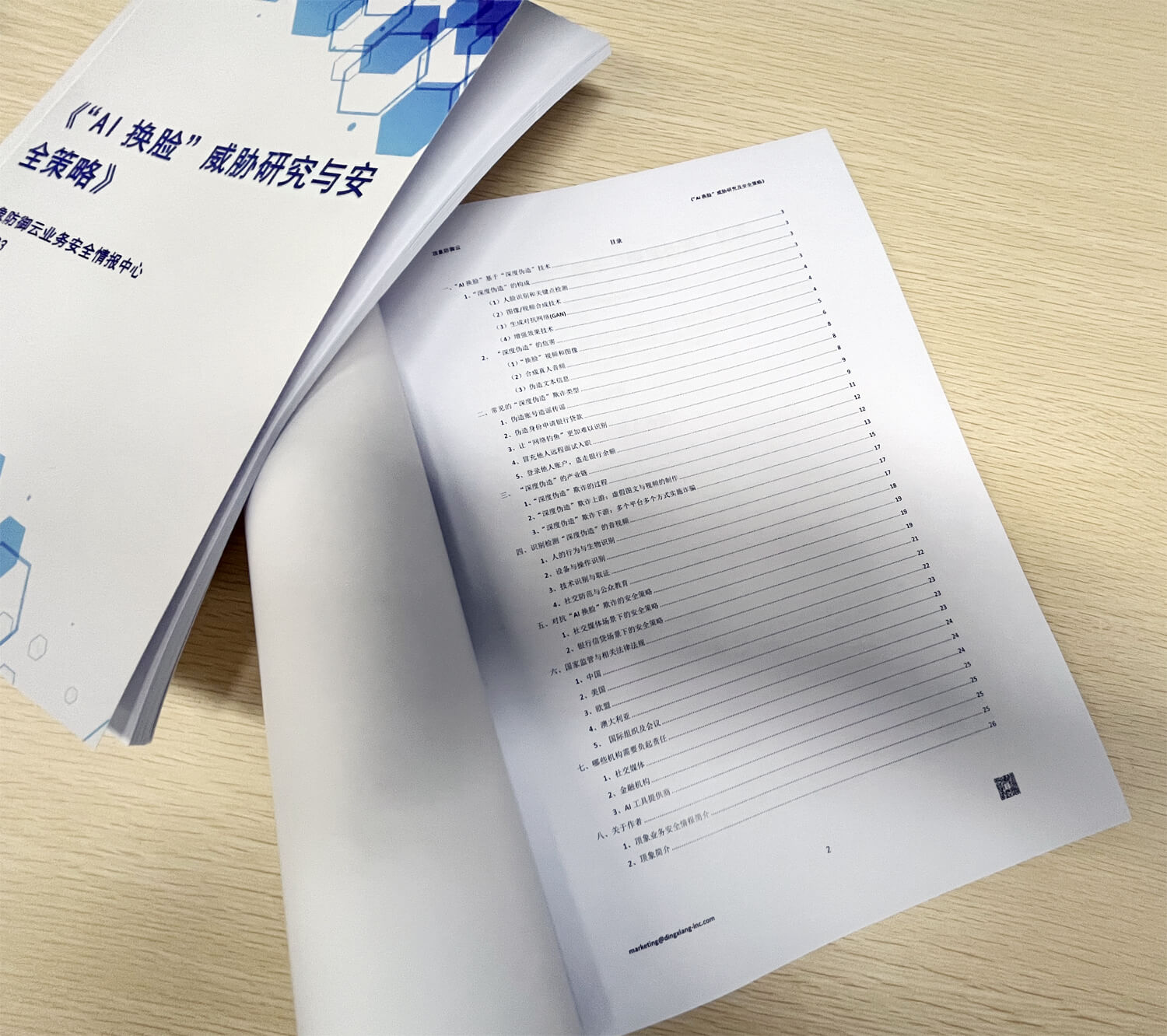

The Dingxiang Defense Cloud Business Security Intelligence Center has recently released an intelligence monograph entitled "Research on 'Deepfake' Threats and Security Strategies", which takes an in-depth look at the "Deepfake" risks that are currently drawing wide concern. The monograph consists of 8 chapters and 32 sections. It systematically covers the components of "Deepfake" threats, the harms of "Deepfake", the "Deepfake" fraud process, typical "Deepfake" threat patterns, the industry chain behind "Deepfake", current mainstream "Deepfake" identification and detection strategies, the regulations that various countries have adopted on "Deepfake", and the responsibilities each party bears in "Deepfake" fraud cases.

Fraud: 6 Common Types of "Deepfake" Fraud

1. Fake accounts that spread rumors

With "Deepfake" technology, fraudsters can create and distribute highly customized, convincing content that targets individuals based on their online behavior, preferences, and social networks, and that blends seamlessly into user feeds, enabling rapid and widespread dissemination. This makes cybercrime more efficient and harder for users and platforms to counter.

Identifying "Deepfake" fake accounts is challenging because they often combine real elements (such as real addresses) with fabricated information, and this mix of legitimate components and false details makes detection and prevention extremely difficult. Moreover, because these fraudulent identities have no prior credit record or associated suspicious activity, conventional fraud detection systems struggle to identify them.
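To make the last point concrete, here is a minimal sketch, assuming a hypothetical identity record with made-up fields (`address_verified`, `prior_credit_records`, `suspicious_events`) and two hypothetical rules. It is not any vendor's actual system; it only illustrates why a rule that looks for a bad track record cannot fire on an identity that has no track record at all.

```python
from dataclasses import dataclass


@dataclass
class Identity:
    """Hypothetical identity record; field names are illustrative only."""
    name: str
    address_verified: bool      # the "real element", e.g. a genuine address
    prior_credit_records: int   # length of the credit history
    suspicious_events: int      # previously flagged activity


def history_based_flag(identity: Identity) -> bool:
    """Hypothetical conventional rule: flag only identities with a bad history."""
    return identity.suspicious_events > 0


def thin_file_flag(identity: Identity) -> bool:
    """Hypothetical extra rule: a verified address attached to an identity with
    no history at all is treated as worth a closer look rather than as clean."""
    return identity.address_verified and identity.prior_credit_records == 0


# A synthetic identity: real address, fabricated everything else, no history.
synthetic = Identity("Alex Example", address_verified=True,
                     prior_credit_records=0, suspicious_events=0)

print(history_based_flag(synthetic))  # False: nothing in its history to object to
print(thin_file_flag(synthetic))      # True
```

The "thin file" rule is only one assumed example of looking at what is missing rather than what is suspicious; on its own it would also catch many genuine newcomers, so in practice it could at most trigger additional verification.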

2. Posing as acquaintances to commit fraud

In January 2024, staff at a multinational company in Hong Kong were defrauded of HK$200 million. That case and a fraud case reported by police in Baotou, Inner Mongolia in November 2023 both involved fraudsters posing as acquaintances.

"Deepfake" technology allows fraudsters to easily imitate the face and voice of a target person in video and audio, reproducing not only the voice but also intonation, accent, and speaking style. To make the scam more credible, fraudsters also gather the victim's sensitive information (such as work habits, daily routines, and travel movements) from social media and public channels to back up their assumed identity, making it hard for victims to tell real from fake. Once they have won the victim's trust, they defraud the victim of money, trade secrets, and more.

3. Faking identities to apply for bank loans

According to McKinsey, synthetic identity fraud has become the fastest-growing type of financial crime in the United States and is on the rise globally. In fact, synthetic identity fraud accounts for 85% of all current fraud. Moreover, a UK GDG study shows that more than 8.6 million people in the UK use false or borrowed identities to obtain goods, services, or credit.

For financial institutions, "Deepfake" fraud is even more worrying. Scammers use "Deepfake" technology to forge information, voices, videos, and images, then combine real and false identity information into new, fabricated identities to open bank accounts or make fraudulent purchases. What's more, fraudsters can use "Deepfake" technology to learn how different banks' services and processes work, and then quickly launch attacks on several banks at the same time.

In the future, when a bank reviews a credit application, it may need to answer not only "Is this applicant suitable for a credit line of $100,000 or $200,000?" but also "Is this applicant a human or an AI?" An estimated 92% of banks are expected to face a "Deepfake" fraud threat.

4. Making "phishing" even harder to identify

Phishing has long been a prominent security topic. Although this form of fraud has existed for decades, it remains one of the most effective ways for fraudsters to attack or penetrate organizations. Drawing on social engineering principles, fraudsters pose as trusted entities via email, websites, phone calls, SMS, and social media, exploiting human traits (such as impulsiveness, resentment, and curiosity) to induce victims to click on fake links, download malware, transfer funds, or disclose sensitive data such as account passwords.

As technology develops, internet fraudsters are also changing their tactics. With the help of AI in particular, fraudsters use "Deepfake" technology to deceive victims, making phishing attacks more sophisticated and extremely difficult to detect. In 2023, "Deepfake" phishing fraud incidents surged by 3,000%.

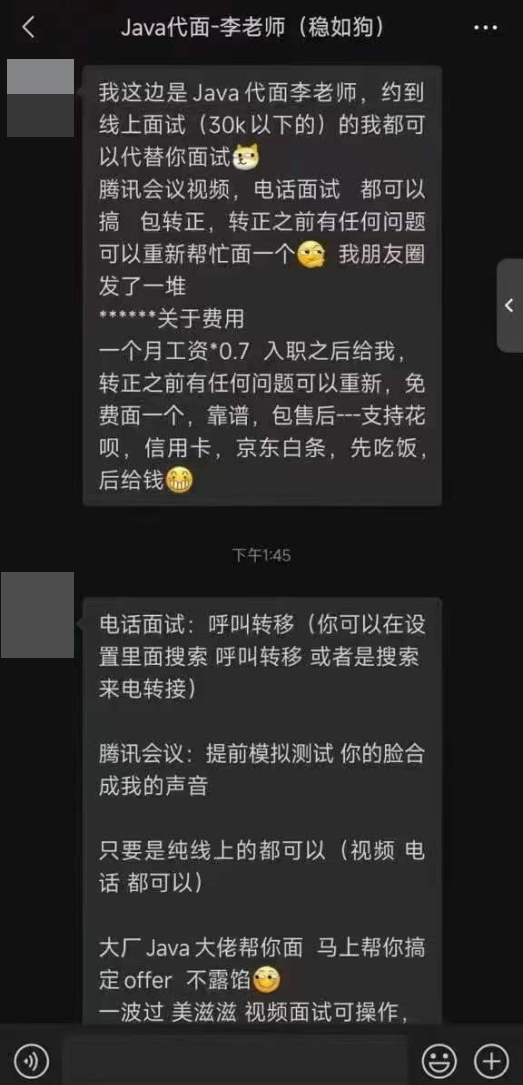

5. Impersonating others in remote job interviews

In July 2022, the Federal Bureau of Investigation (FBI) warned that a growing number of fraudsters are using "Deepfake" technology to pose as job seekers in remote job interviews in order to fraudulently collect salaries and steal business secrets.

The FBI did not specify the ultimate goal, but the agency noted that fake applicants who succeed and are hired gain access to sensitive data such as "customer PII (personally identifiable information), financial data, corporate IT databases and/or proprietary information."

The FBI also said that stolen personal information belonging to some victims had been used to conduct remote interviews, and that other people's profiles had been used to get through pre-employment background checks.

6. Faking logins to steal bank balances

On February 15, 2024, the security company Group-IB announced that it had found a malware named "GoldPickaxe". The iOS version of the malware lured users into performing facial recognition and submitting identity documents, then created deepfakes from the captured facial data.

Using these deepfaked face videos, fraudsters can log in to the user's bank account, intercept the SMS verification messages the bank sends to the user's phone, and then transfer money, make purchases, change the account password, and so on.

Process: The "Deepfake" Deception Process Consists of Four Main Steps

Take a "Deepfake" financial fraud case intercepted by the Dingxiang Defense Cloud Business Security Intelligence Center as an example. The fraud process has four main stages, and "Deepfake" technology is only one key link in the chain. If the victim fails to recognize what is happening at the other links, the fraudsters' instructions can easily lead them step by step into the trap.

Stage 1: Gaining the victim's trust.

Fraudsters contact the victim through text messages, social apps, social media, phone calls, and so on, and gain trust, for example, by reciting the victim's name, home address, phone number, employer, ID number, colleagues or partners, or even some of the victim's past experiences.

Stage 2: Stealing the victim's face.

Fraudsters make video calls with victims through social apps, video conferencing, and other methods. During the call, they capture the victim's facial information (the frontal face, lowering and turning the head, mouth movements, blinking, and other key actions) to produce "Deepfake" videos and portraits. During this stage, victims are also induced to set up call forwarding or to download malicious apps that can forward or intercept calls and messages from the bank's customer service.

Stage 3: Logging in to the victim's bank account.

The fraudsters log in to the victim's bank account through the bank's app, submit the fake videos and portraits made with "Deepfake" to pass the bank's facial recognition authentication, and intercept the SMS verification codes and risk alerts that the bank sends to the victim's phone.

Stage 4: Transferring the victim's bank balance.

The bank's manual verification call is forwarded to a virtual number set up by the fraudsters. Posing as the victim, they pass the verification by the bank's customer service staff and finally transfer the balance out of the victim's bank card.
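The four stages show that each control (face recognition, SMS code, manual callback) is defeated individually, because stage 2 supplies the face and the call forwarding that stages 3 and 4 rely on. The sketch below illustrates that reasoning under loudly stated assumptions: all field names, weights, and the threshold are hypothetical, and it assumes the bank can observe signals such as a new device or recently enabled call forwarding, which the case description does not say. It is not the Dingxiang approach or any bank's actual logic.

```python
from dataclasses import dataclass


@dataclass
class SessionSignals:
    """Hypothetical per-session signals; names and availability are assumptions."""
    face_match_passed: bool       # stage 3: deepfake video passed face recognition
    sms_code_correct: bool        # stage 3: intercepted SMS code was entered
    manual_callback_passed: bool  # stage 4: forwarded call answered by the fraudster
    new_device: bool              # login from a device never seen on this account
    call_forwarding_recent: bool  # stage 2: forwarding set up shortly before login
    transfer_ratio: float         # transfer amount / account balance


def passes_independent_checks(s: SessionSignals) -> bool:
    """Each control evaluated on its own; in the case above, all of them pass."""
    return s.face_match_passed and s.sms_code_correct and s.manual_callback_passed


def correlated_risk(s: SessionSignals) -> int:
    """Hypothetical score built from context the individual checks ignore."""
    score = 0
    if s.new_device:
        score += 1
    if s.call_forwarding_recent:
        score += 2  # forwarding undermines both the SMS code and the callback
    if s.transfer_ratio >= 0.9:
        score += 1  # draining (almost) the whole balance
    return score


def decide(s: SessionSignals, hold_threshold: int = 3) -> str:
    """Reject on a failed check; otherwise hold risky sessions for review."""
    if not passes_independent_checks(s):
        return "reject"
    return "hold" if correlated_risk(s) >= hold_threshold else "allow"


attack = SessionSignals(True, True, True, new_device=True,
                        call_forwarding_recent=True, transfer_ratio=1.0)
legit = SessionSignals(True, True, True, new_device=False,
                       call_forwarding_recent=False, transfer_ratio=0.1)

print(decide(attack))  # hold
print(decide(legit))   # allow
```

The design point is only that signals from earlier stages (here, call forwarding) can cast doubt on later "successful" checks; a real system would need signals it can actually obtain and thresholds tuned on real data.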

"Deepfake" is dangerous not only because it generates fake videos and images, but also because it feeds an entire fraud ecosystem: an intricate network of bots, fake accounts, and anonymous services, all designed to produce, amplify, and distribute fabricated information and content, and all of which are hard to identify, detect, and trace.

Identification is difficult. "Deepfake" technology has advanced to the point of convincingly generating realistic simulations of real people, making it increasingly difficult to tell real content from fake without specific training. Recognizing this threat is the first step in defending against it.

Detection is difficult. As the quality of "Deepfake" content improves, detection becomes a major problem: not only can the naked eye not reliably identify it, but some conventional detection tools also fail to catch it in time.

Traceability is difficult. There are no digital fingerprints: emulators forge locations, and IP addresses and device information are faked. This leaves no clear digital trail to follow, sometimes not even a detectable malware signature, so traditional network security measures cannot provide effective protection.

It is a threat of the digital age in which attackers are invisible and elusive; they not only fabricate information but also manipulate the reality that each participant perceives. Combating "Deepfake" fraud therefore requires, on the one hand, identifying and detecting fake videos, images, and information (effectively recognizing fake content), and on the other hand, identifying and detecting the channels and platform networks through which "Deepfake" schemes operate (improving the security of digital accounts on multiple fronts). This calls not only for technical countermeasures, but also for sophisticated psychological defense and greater public safety awareness.